Skypoint’s Greg Petrossian recently joined the Jan 9th 2024 episode of the CanopyIQ Podcast, a show that explores how digital innovation is transforming the way we think about and respond to the rapidly evolving challenges of today’s senior care industry.

Prior to the proliferation of AI, whether he knew it or not, Greg was preparing himself to be positioned as an industry leader. Greg and Adam discuss the importance of quality data in AI, how generative AI could benefit senior living and medical facilities, the new AI “arms race,” and whether we need a new governing body.

Here’s a summarized transcript of the episode:

Q: What is it like to be working in what is literally the hottest field in technology right now?

It’s kind of surreal to be here on the edge of technology. We made some great strategic bets with Microsoft, Databricks and some of our other vendor partners that really allowed us to meet the hype at the right time. So to have this opportunity to talk about AI again is just surreal to me, and I love it.

Q: There’s a statement on your website: “We recognize that the future belongs to those who can leverage data and AI effectively.” I always thought of data and AI as sort of the same thing. Can you elaborate on that?

The best analogy I can think of is that data is the fuel, whereas AI is the engine and the output. A lot of people use the analogy that data is the new oil, and an engine performs only as well as the gasoline you put into it. We also hear another saying a lot in the data world: garbage in, garbage out. What AI is doing for organizations is highlighting the areas where they are deficient, such as having clean data, or having systems that collect the data they need to make decisions or operate their business.

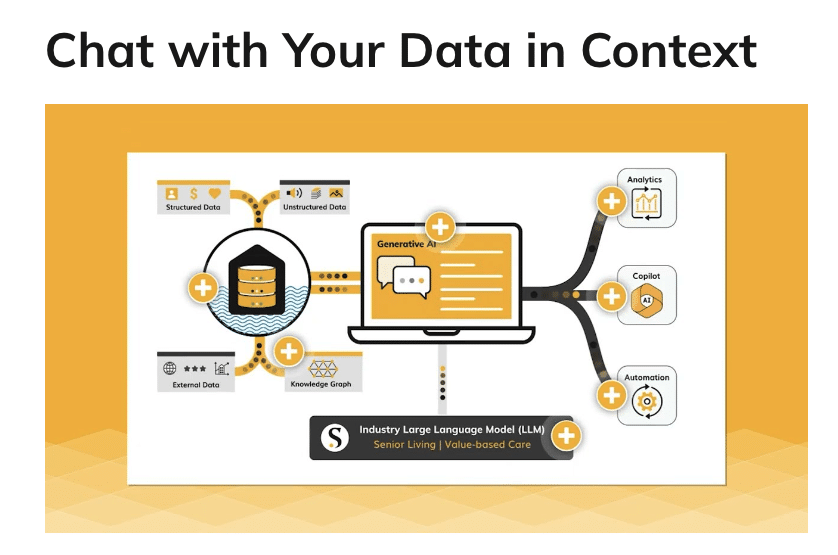

AI is just making that more and more important every day, especially now with generative AI. We have a tagline of “chat with your data.” The only way you’re going to make generative AI trustworthy is by having clean data, and that’s really what OpenAI did. They were actually the first ones on the block to have the clean data to make it really functional, and then to allow it to become something that could be a commercial product.

Q: According to senior living news, “83% of senior living executives believe data and AI will play an essential role in the industry within five years but only 12% actually have a plan to onboard AI to integrate their systems.” Is that a catastrophe waiting to happen or is it a gold mine waiting to be mined?

Honestly, it’s a tale as old as time. We want to do this (AI) thing, but we have no idea how to do it, we have no plan, and we’re just going to throw ourselves at it. I can always appreciate the enthusiasm and the confidence of organizations. But when everything hinges on how competitive your organization can be, and the market is not very forgiving to organizations that fall behind, this isn’t a fly-by-the-seat-of-your-pants thing. This is your data we’re talking about. This is the future of your organization. Too many times I see an organization say, “We’re going to try and do this ourselves, we’re going to figure it out.” Then within six months, they’re calling back and saying, “OK, we screwed up. We wave the white flag. Help us get started.”

I’m hoping more organizations are willing to do that planning piece up front. It’s all about what you are trying to achieve and the business cases, because if you don’t do that, you’re throwing darts with a blindfold on, and you’re building infrastructure and solutions that don’t actually get you to the next rung of sophistication.

Q: “Skypoint’s AI platform consolidates fragmented data enabling you to chat with your data sources grounded in context.” Can you explain that in layman’s terms?

What we’re doing at Skypoint is bringing industry context to this generative AI agent. What does that really mean? Think of using ChatGPT. It’s a commercially available agent that has very general knowledge of a lot of things, it’s as wide as it is deep.

But do you know my business? Do you know how we run things? Do you know the difference between skilled nursing facility (SNF) and Sunday Night Football? It might need a little bit more context to get the exact right answer for you.

We have brought what we call a “corpus” of knowledge. Think of it like libraries of knowledge: we’ve reduced those libraries down to the most pertinent facts, policies and history around senior care and senior living, and we use that to augment the generic knowledge.

Then, in addition to augmenting that generic knowledge, we bring along the business context of the organization: its data systems, policies and procedures. The AI agent needs a way to access that. To provide that access, you must either create data structures or provide documents, images and other types of files in a consumable format, so that the AI agent can respond to your questions in a reliable and consistent manner.
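To make the idea of grounding a general-purpose model in industry and business context a little more concrete, here is a minimal sketch. It assumes a toy keyword-overlap retriever standing in for a real search index, and the corpus snippets, `retrieve` and `build_prompt` are all hypothetical illustrations, not Skypoint’s implementation. The point is simply that relevant context is selected first and placed in front of the question, so “SNF” is answered as a care setting rather than a football broadcast.

```python
import re

# Hypothetical corpus: industry knowledge plus (illustrative) business policies.
CORPUS = [
    "SNF means skilled nursing facility, a licensed post-acute care setting.",
    "Hypothetical policy: fall-risk assessments must be completed within 24 hours of admission.",
    "Sunday Night Football airs on NBC.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents sharing the most words with the question.

    Documents with no overlapping words are dropped entirely.
    """
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return [d for d in ranked[:k] if q & tokenize(d)]


def build_prompt(question: str, documents: list[str], k: int = 2) -> str:
    """Assemble a prompt that asks the model to answer only from retrieved context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("What does SNF mean in senior care?", CORPUS))
```

In a production system the keyword overlap would typically be replaced by an embedding or hybrid search, but the shape of the flow (retrieve, then prompt with context) stays the same.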

Q: Where do you see this all going in terms of corporate governance, government oversight and regulation? Obviously the healthcare industry is particularly sensitive when it comes to data and HIPAA compliance. What are the risks and what are the best ways to mitigate those risks?

Both healthcare and the government are two steps behind. Healthcare recently pushed for FHIR (Fast Healthcare Interoperability Resources) and FHIR-based APIs and capabilities as ways of integrating data between systems. That caused everybody to spend all this money to stand up FHIR APIs, and we’ve already moved on from APIs to data sharing. On privacy and compliance, I think we’re still trying to catch up with modern technology.

At first the question was, how do we programmatically keep a patient’s data secure via an API? Now it’s, how do we programmatically keep a patient’s data secure in an AI agent? We’re going to need policies, and we need to understand how organizations can best abide by whatever the ruling bodies bring to the table.

Overall, we’re controlling and securing data in the same ways we have in the past, through more traditional means. It’s the same approach we use today: role-based access, or access-based security.

You do the same thing with generative AI. Based on who I am in the organization, where I report geographically and where I am, make sure you’re filtering all the data and prompt answers down to only what I should be able to see and speak to.
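A minimal sketch of that idea, assuming a simple in-memory role-to-field policy: records are filtered by the user’s role and regions before anything is handed to the AI agent, so the model never sees data the user couldn’t see directly. The `User`, `Record` and `ROLE_FIELDS` names, the roles and the regions are hypothetical; a real deployment would enforce this upstream, for example in the data warehouse’s own access controls.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    name: str
    role: str
    regions: frozenset[str]  # geographies this user is allowed to see


@dataclass(frozen=True)
class Record:
    facility_id: str
    region: str
    field: str
    value: str


# Hypothetical policy: which data fields each role may see.
ROLE_FIELDS: dict[str, set[str]] = {
    "regional_director": {"occupancy", "staffing"},
    "nurse": {"occupancy", "staffing", "medications"},
}


def visible_records(user: User, records: list[Record]) -> list[Record]:
    """Keep only records in the user's regions whose field their role permits."""
    allowed = ROLE_FIELDS.get(user.role, set())  # unknown roles see nothing
    return [r for r in records if r.region in user.regions and r.field in allowed]


def agent_context(user: User, records: list[Record]) -> str:
    """Build the text context passed to the AI agent from permitted records only."""
    return "\n".join(
        f"{r.facility_id} {r.field}: {r.value}" for r in visible_records(user, records)
    )


if __name__ == "__main__":
    director = User("Dana", "regional_director", frozenset({"north"}))
    data = [
        Record("F1", "north", "occupancy", "92%"),
        Record("F1", "north", "medications", "(restricted)"),
        Record("F2", "south", "occupancy", "88%"),
    ]
    print(agent_context(director, data))
```

Filtering before the prompt is built, rather than asking the model to withhold information, keeps the security decision in deterministic code.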

That emphasizes the need for organizations to secure their data further upstream, whether at the data warehouse level or through a global data governance program, which are things organizations were only just starting to lean into.

So as organizations invest in their technology, so much is changing that part of me feels bad. You just finished building this thing, and we’ve already moved on from it.

I think speed and agility are really important in the technology space, and that’s also why it’s really important to work with partners that can keep up with the pace. Most organizations are focused on their core competency, and technology sits outside of that.

Q: I was curious about a comment you made, which is moving beyond APIs. That’s new to me.

I was just having the same talk earlier. There was a company trying to integrate data and create a platform for it, where everything is going to be provided through an API.

Now, that’s great if you’re building an application. But as data becomes the new oil, we need really easy ways of providing handshakes between organizations to share data, and I really believe the data-sharing capabilities introduced by technology companies like Snowflake and Databricks are the future.

We’re starting to see the technology space standardize around open data formats like Delta. We’re seeing Iceberg data formats get embraced, because these open data formats allow data to be passed more freely between organizations, with all the security and compliance coming along with it. The big leap we’re making is that data is no longer locked up between vendors, clouds and systems. There truly is going to be a standardized layer through which data can be passed around with context, structure and security all together.

Q: Where do you see that aspect of modeling going, where human behavior actually predicts outcomes and we’re able to start modeling solutions by predicting exactly what is going to transpire based on averages and data flows?

What generative AI is doing is helping us bring down the cost, time and effort of building predictive models. What you’re describing is: based on a number of inputs, can you predict an output? That’s what traditional AI has really focused on. What is the probability of this part breaking, so we can proactively fix it? A consumer clicked on this link and looked at this item, so the probability of them buying is pretty high. Send them a coupon.
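The coupon example above is a classic propensity model. As a minimal sketch, assuming a logistic-regression scoring function with made-up weights (in practice they would be learned from historical behavior), the inputs are combined into a purchase probability and a threshold decides whether to act. `WEIGHTS`, `BIAS` and the 0.6 threshold are hypothetical values chosen for illustration.

```python
import math

# Hypothetical model parameters, standing in for weights learned from past data.
WEIGHTS = {"clicked_link": 1.2, "viewed_item": 1.5}
BIAS = -2.0


def purchase_probability(features: dict[str, float]) -> float:
    """Logistic model: p = 1 / (1 + exp(-(bias + sum of weight * feature)))."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


def should_send_coupon(features: dict[str, float], threshold: float = 0.6) -> bool:
    """Act only when the predicted probability of buying clears the threshold."""
    return purchase_probability(features) >= threshold


if __name__ == "__main__":
    shopper = {"clicked_link": 1.0, "viewed_item": 1.0}
    print(round(purchase_probability(shopper), 3), should_send_coupon(shopper))
```

With these illustrative weights, a shopper who both clicked and viewed scores about 0.67 and gets a coupon, while one who only clicked scores about 0.31 and does not.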

Traditional AI has been so expensive and sophisticated to build that it’s been out of reach for a lot of organizations. One of the first generative AI use cases to be commercialized was helping developers write code better, stronger, faster.

If generative AI is able to write really efficient SQL to help query a system, can generative AI help me build some machine learning models? Or point me in the direction of the types of models we should be building? Or maybe it’s looking at the data and finding patterns we never would have seen. It can help us get to the point of finding opportunities for traditional AI faster.

We’re getting closer, but what organizations are still grappling with is how to use generative AI for the simpler use cases. Generative AI is helping us leapfrog to that point, and it’s helping us focus on what I call “eating your vegetables”: your data governance program and keeping your data clean before you get to the dessert of insights.

We’re going to see more traditional AI, just more quickly and cheaply built and be part of our daily lives.

The one caveat I want to mention, though, is something I always talk about: I believe traditional AI has been deployed in ways that have had real impact on people.

Think about credit scores. There were AI models impacting people’s lives that had innate bias in them, and it went largely unchecked, because these AI models are kind of a black box.

What’s really cool about generative AI is although you can’t see its brain thinking, you can see why it did something or how it did something.

I’m hoping that we can tease out why these models make certain predictions. If you can read how an AI agent built a certain model, and why, that starts to give you some understanding of where bias could be built in or where the data could be deficient. I think that’s where we’re going to see a golden era, not only of generative AI, but also of traditional AI.

Q: There are worries that AI is going to become too intelligent, too human like, and then the genie is out of the bottle. Am I capturing that correctly or is it really more of “we’re all in this together”?

For me, as someone who works in AI every day: although it can pass the Turing test and seem like it can speak to us really eloquently, it’s still pretty dumb. It’s not like it’s coding itself.

We’re doing a lot of human work to make even pretty simple use cases somewhat consistent and productive.

There’s a lot of sensationalized news out there about generative AI. I think a lot of people get scared when they see something that can speak so naturally get something right. That’s scary, but again, it’s still patterns and data on the back end.

Here’s a comment I heard from somebody recently: “What happens when AI can be intuitive and it can start doing things maliciously?”

First, AI is not a human. It can’t intuit and it can’t infer; everything’s a pattern, and it can go through and recognize patterns. Maybe it can figure out elementary arithmetic, but that doesn’t mean it’s going to suddenly become sentient.

Let’s not get too sensational about where we are today. I do agree with you that it is an arms race over whose models get commercialized, because that’s really what these cloud and technology vendors want: “I want to have the best model that everybody’s going to go build their product on.”

We use components of ChatGPT because it’s the best. It’s what we were able to very quickly glom onto, build anchors around and build product with. Everybody wants to be that foundation and wants value-creation companies like mine on top of their stack, because that’s how they get paid.

We absolutely need a governing body, but I also think we need a governing body that understands the difference between the sensationalized news and what is happening behind the curtain. That takes technology leaders who are leaning in and keeping pace with what’s going on with AI. What types of breakthroughs should we pause on, and what types of breakthroughs are really just kind of a trick? It’s almost like we’re being fooled by how poetic ChatGPT can be, but when you start trying to feed your data into it, everything starts breaking down real fast.

We still have a long way to go with AI. We’re nowhere near where some of the doomsayers believe we are, but it’s definitely going to change the landscape, and I think that’s what people are afraid of.

How does this change my day-to-day? How does it change the way I work? When something is introduced that hits on those notes, yeah, we’re scared. But what I tell people is that those who embrace productivity tools are always the ones who end up on top. These are enabling technologies for humans. They’re not replacing humans.

We see copilots in the aviation space, but they’re not the ones flying the plane. AI isn’t smart enough to do all of that. Yes, my Tesla can drive itself, but there’s certain things that I as a human can intuit or I can take over.

And just like with those black-box AI applications I was talking about, we need a human in the middle. We always will. Even as a leader in the AI and data space, I am very much a proponent of making sure there is a human in the driver’s seat.