Back in late May, Microsoft introduced a new feature in Power BI called Datamarts.

This feature allows advanced business users to create their own Azure SQL database that automatically generates a Power BI dataset. Users create this database using just the web with little to no coding.

With Datamarts, you can create a tool that lands somewhere in between a Dataflow and a standard Power BI desktop dataset. This tool allows departments to bring together related data with minimal support from IT. Users can even lock down business logic in the semantic layer to ensure there is “one version of the truth”.

Should you use this feature today? Honestly? Probably not.

Should you use this feature six months from now? You might be a fool not to.

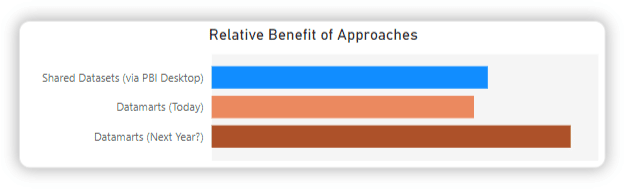

This technology is extremely promising, but at the moment the use cases for it are quite limited. Its biggest problem is that today, most of what it does is better addressed with standard Shared Datasets built-in Power BI Desktop.

Even with its current limitations, you should definitely be paying attention, because Datamarts has the potential to be very powerful in the near future.

The term “game changer” often gets thrown around for things that emphatically, change no game nowhere. With a few tweaks, Datamarts could indeed change the way we play the game of Power BI.

Leaving Cache on the Table

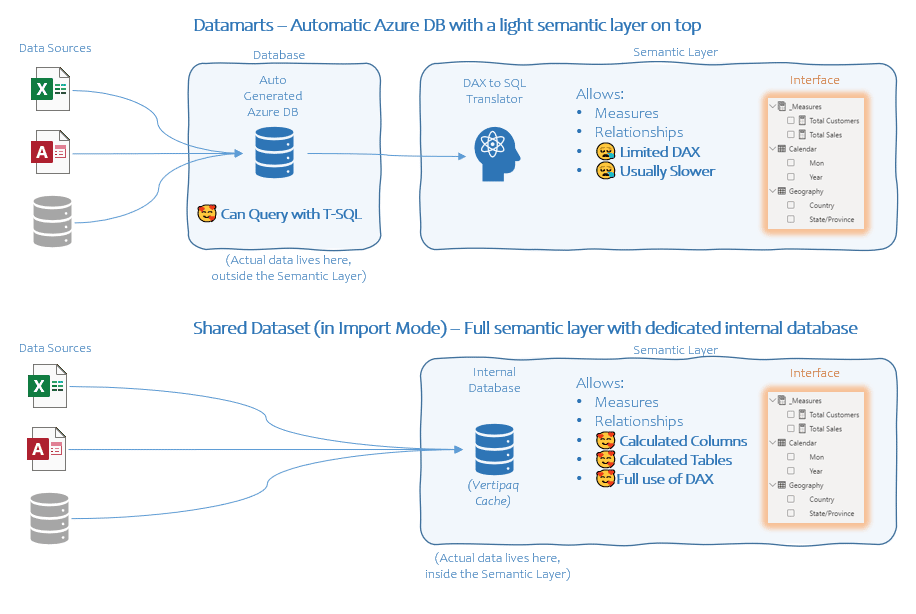

Today’s Datamarts are an easy way to create a database with a light semantic layer on top.

Let’s pull apart the terms “Database” and “Semantic Layer:”

Database

The Database is the mechanism that holds the tables of data together in one place. You can query these using SQL (T-SQL specifically), and you have incredible flexibility in terms of how you write your queries.

Semantic Layer

A Semantic Layer is a layer of metadata on top of a database. It forces everyone to agree ahead of time on how to connect/join tables together (Relationships), and how to answer any particular business questions (Measures).

In Power BI, you query these with DAX rather than SQL. Semantic Layers are designed to make it easy for humans or programs like Power BI to write lots of ad hoc queries that use consistent business logic and enforce one version of the truth.

In some Semantic Layers, such as a regular Power BI Dataset, the database that the metadata sits on top of is actually an internal dedicated database buried inside the Semantic Layer. This is not accessible to outside users.

Here, the copies of the source data are stored in a table format optimized for fast and flexible querying with the DAX language. When people say the data is “cached” inside Power BI, this is actually what they mean.

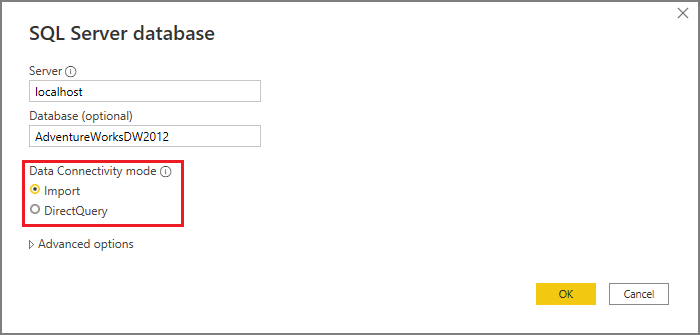

You can disable this in standard Power BI by switching from “Import Mode” to “DirectQuery Mode.” In the latter, no dedicated copy of the data is stored in the Semantic Layer and so the data has to live in a separate “normal” database. In this mode, DAX queries are translated into SQL and get run on the separate database the data lives in.

This has some benefits but is often noticeably slower. You can’t write nearly as complex DAX code, as things that can’t translate from DAX to SQL aren’t allowed.

Pivoting back to Datamarts (pun very much intended): The biggest issue Datamarts has today is that the Semantic Layer sitting on top of the Database is in this “DirectQuery Mode,” rather than “Import Mode.”

Conceptually, there’s something nice about this. The data is stored in a “normal” database, which anyone can write queries against with good old T-SQL. At the same time, a Semantic Layer is tightly bound to it, nudging people to lock down business logic with Relationships and Measures.

The problem is that DirectQuery Mode, in particular the flavor being used today, comes with some heavy costs. It’s generally slower, the DAX that you can write is limited, and you can’t create DAX calculated columns or calculated tables.

In fairness, for small to mid-sized datasets, a lot of these costs are pretty minimal in practice. However, they are costs that you don’t have with standard Shared Datasets built-in Power BI Desktop. Therefore, these costs must be offset if people are going to switch to this new feature.

So if you’re willing to incur those admittedly modest costs, what do you get out of it?

- You can query the data using T-SQL rather than DAX.

- You can easily query the database data with an integrated web Power Query editor.

- You can build these entirely via the web, which is great for Mac users.

These are all nice, and are built on some very cool tech innovations, but how are Datamarts in terms of business value? They’re marginal at best, and not sufficient enough to steer people away from what they’ve been doing for several years now.

I can see some benefits here.

For example, an IT department that has budget Excel worksheets emailed to them every few weeks and then imports them through an SQL server will likely benefit from Datamarts. Is this worth pivoting to a new technology though? Probably not.

Right now, I would recommend using normal Shared Datasets built-in Power BI Desktop over Datamarts. The modest benefits are outweighed by the slightly less modest costs—but that’s just today. There’s a whole other story here if you know where to look.

Back to the Future

Okay, so maybe using Datamarts has some drawbacks today. But perhaps a better way to think about Datamarts today is as an early beta release of something really, really cool.

Today they are like a standard Shared Dataset but harder to build, cost more (you need Premium or PPU to use them), and are probably at least a little slower. They don’t offer a huge value proposition at the moment.

However, there are a ton of cool technological components already visible if you are looking. We can imagine where this is all going once Datamarts crosses a few technical hurdles.

First, the documentation for Datamarts suggests that the Direct Query Mode may just be a preview feature. Once we have the option for Import Mode available, the scales might start tipping away from standard Shared Datasets in favor of Datamarts. It’s very possible that Import Mode could be made available in just a few years.

This would create a scenario where the exact same data would be stored twice. In the:

- Azure DB

- Semantic Layer (Azure Analysis Services)

I think this would still be worth it in many cases. Eventually, it would be great if the Semantic Layer could just handle a subset of T-SQL natively. But that’s even further down the line.

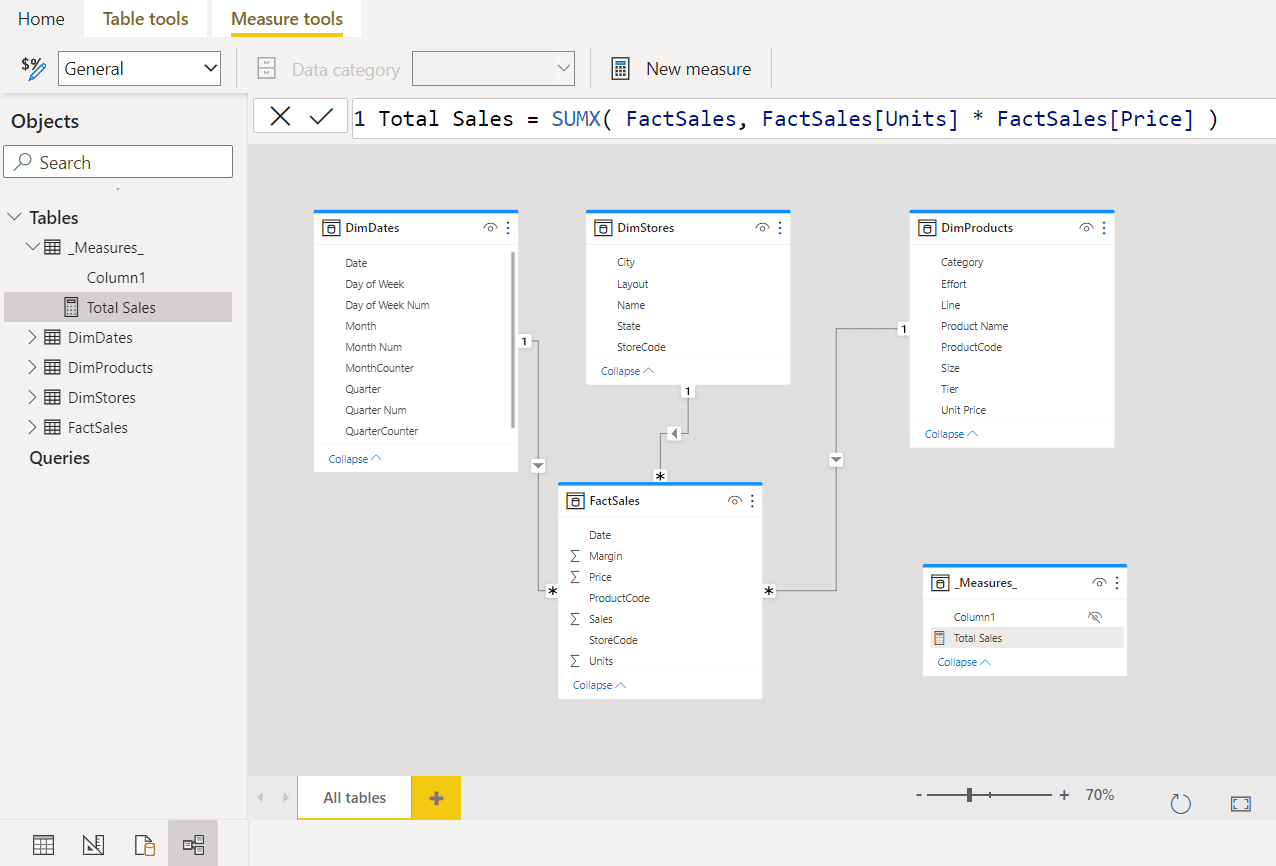

Once that’s done, two features that are already there today suddenly become very appealing. If you’re like me, the features that stood out most in Datamarts were the web versions of both the Power BI modeling tab and the Power BI DAX formula bar.

Those two things alone are huge. They have only ever existed on desktop, so seeing them on the web is a big deal. Paired with a proper Import Mode, we would suddenly be very close to a pure web modeling experience.

This means when a department or IT wants to build a Share Dataset, rather than building in Power BI Desktop, they can do the whole thing on the web. Users will be able to skip the part where a PBIX file is published with a Dataset that everyone needs and a weird blank Report that everyone is confused by.

Because of the way data is stored in PBIX files, traditional Power BI source control has always been clunky at best.

Because Datamarts only concern themselves with the Dataset (and not the Reports, which are what hold the visuals), this means Datamarts might allow for proper source control in Power BI.

Let me say that again…Datamarts could usher in proper source control in Power BI. This would easily make these the preferred way to build common-use Datasets.

Further, it’s entirely possible that source control could be largely automated in a way that satisfies both business users and IT.

It could be possible to export a .BIM file and check it into GIT. We may also see something similar to automatic version control in Google Docs with built-in revisions tracking, which would highlight changes between different versions of the same document. We may even see both of these things, which is huge.

Additionally, because this is web-based, it is possible for multiple developers to work on the same solution at once. This could make working on a Datamart similar to working on a Google Doc with easy fluid collaboration.

Imagine several DAX developers building out measures at once while business users are filling out descriptions and tweaking formatting strings. The efficiencies gained here would be huge. Build and repair times would drop very quickly, which means more labor hours would be dedicated to doing actual work, and less burned up by coordination and hurry-up-and-wait workflows.

In addition to all of this, we can imagine more full Power BI Desktop integration. Users would be able to access tables in the Datamart just like they would a Dataflow. They can do that now by connecting with the Azure DB connector, but this is too tricky for most users and the experience from Dataflows can literally be copied here and make for a great experience.

In my limited experience, what most folks want is to connect to a Semantic Layer with T-SQL. When I dig deeper, almost every time they just want to select to extract a handful of tables with maybe a WHERE clause. Said otherwise, they just want to pull out whole tables with the occasion filter. Making this easier would be very convenient.

Together, these allow Power BI to hit an amazing sweet spot. Business users could build shared datasets entirely on the web with built-in source control. With that, the game would indeed be changed.

None of this is guaranteed and no one at Microsoft is whispering into my ears. But while I don’t have a lot of use for Datamarts as they exist today, the technology that showed up with them can’t be a coincidence. I think big things are coming with Datamarts, and I am very much here for them.

Learn more about our Power BI Capabilities, private training sessions, or our free monthly Dashboard In a Day workshops.